The notes below outline the steps I took to test two-way SSL from scratch using updated keytool functionality found in Java 7. Rather than use a commercial certificate authority like VeriSign (which costs real money), my notes show how to generate your own CA and all PKI artefacts using just the keytool command. These artefacts can subsequently be used for development / testing / private-network scenarios. Note that keytool is simply a CLI / console program shipped with the Java JDK / JRE that wraps the underlying Java security/crypto classes.

If you can follow these steps and understand the process, then transitioning to a commercial trusted certificate authority like VeriSign should be straightforward.

In my previous article http://todayguesswhat.blogspot.com/2012/07/weblogic-https-one-way-ssl-tutorial.html I state:

One-way SSL is the mode which most "storefronts" run on the internet so as to be able to accept credit card details and the like without the customer’s details being sent effectively in the clear from a packet-capture perspective. In this mode, the server must present a valid public certificate to the client, but the client is not required to present a certificate to the server.

With two-way SSL, trust is enhanced by requiring that both the server and the client present valid certificates to each other so as to prove their identity.

From an Oracle WebLogic Server perspective, two-way SSL enables the server to only* accept incoming SSL connections from clients that can present a public certificate that can be validated against the contents of the server’s configured trust store.

*Assuming WebLogic Server is configured with “Client Certs Requested And Enforced” option.

The actual certificate verification process itself is quite detailed and would make a good future blog post. RFC specifications of interest are RFC 5280 (which obsoletes RFC 3280), RFC 2818, and RFC 6125.

WebLogic Server can also be configured to subsequently authenticate the client based on some attribute (such as cn – common name) extracted from the client’s validated X509 certificate by configuring the Default Identity Asserter; this is commonly known as certificate authentication. This is not mandatory, however: username/password authentication (or any other style, for that matter) can still be used on top of a two-way SSL connection.

Now let’s get on with it …

Why do we need Java 7 keytool support? Specifically for signing certificate requests, and also to be able to generate a keypair with custom X509 extensions such as SubjectAlternativeName / BasicConstraints etc.

High-level, we need the following:

Custom Certificate Authority

Server Certificate signed by Custom CA

Client Certificate signed by Custom CA

Artifacts required for two-way SSL to support WebLogic Server and various client types (Browser / Java etc):

Server keystore in JKS format

Server truststore in JKS format

Client keystore in JKS format

Client truststore in JKS format

Client keystore in PKCS12 keystore format

Client truststore in PKCS12 format

CA certificate in PEM format

Note: Browsers and Mobile devices typically want public certificates in PEM format and keypairs (private key/public key) in PKCS12 format.

Java clients, on the other hand, generally use JKS format keystores.

Steps below assume Linux zsh

Constants – edit accordingly

CA_P12_KEYSTORE_FILE=/tmp/ca.p12

CA_P12_KEYSTORE_PASSWORD=welcome1

CA_KEY_ALIAS=customca

CA_KEY_PASSWORD=welcome1

CA_DNAME="CN=CustomCA, OU=MyOrgUnit, O=MyOrg, L=MyTown, ST=MyState, C=MyCountry"

CA_CER=/tmp/ca.pem

SERVER_JKS_KEYSTORE_FILE=/tmp/server.jks

SERVER_JKS_KEYSTORE_PASSWORD=welcome1

SERVER_KEY_ALIAS=server

SERVER_KEY_PASSWORD=welcome1

SERVER_DNAME="CN=www.acme.com"

SERVER_CSR=/tmp/server_cert_signing_request.pem

SERVER_CER=/tmp/server_cert_signed.pem

SERVER_JKS_TRUST_KEYSTORE_FILE=/tmp/server-trust.jks

SERVER_JKS_TRUST_KEYSTORE_PASSWORD=welcome1

CLIENT_JKS_KEYSTORE_FILE=/tmp/client.jks

CLIENT_JKS_KEYSTORE_PASSWORD=welcome1

CLIENT_KEY_ALIAS=client

CLIENT_KEY_PASSWORD=welcome1

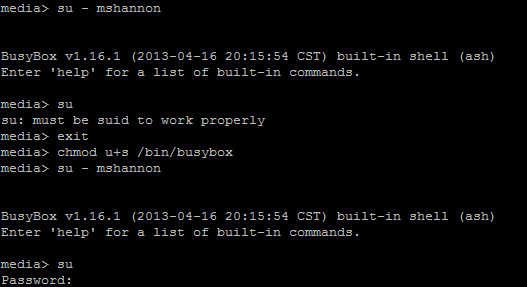

CLIENT_DNAME="CN=mshannon, OU=MyOrgUnit, O=MyOrg, L=MyTown, ST=MyState, C=MyCountry"

CLIENT_CSR=/tmp/client_cert_signing_request.pem

CLIENT_CER=/tmp/client_cert_signed.pem

CLIENT_JKS_TRUST_KEYSTORE_FILE=/tmp/client-trust.jks

CLIENT_JKS_TRUST_KEYSTORE_PASSWORD=welcome1

CLIENT_P12_KEYSTORE_FILE=/tmp/client.p12

CLIENT_P12_KEYSTORE_PASSWORD=welcome1

Verify version of Java

(/usr/java/jre/jre1.7.0_45)% export JAVA_HOME=`pwd`

(/usr/java/jre/jre1.7.0_45)% export PATH=$JAVA_HOME/bin:$PATH

(/usr/java/jre/jre1.7.0_45)% java -version

java version "1.7.0_45"

Java(TM) SE Runtime Environment (build 1.7.0_45-b18)

Java HotSpot(TM) 64-Bit Server VM (build 24.45-b08, mixed mode)

Create CA, Server and Client keystores

# -keyalg - Algorithm used to generate the public-private key pair - e.g. RSA (used below) or DSA

# -keysize - Size in bits of the public and private keys

# -sigalg - Algorithm used to sign the certificate - for DSA, this would be SHA1withDSA, for RSA, SHA1withRSA

# -validity - Number of days before the certificate expires

# -ext bc=ca:true - WebLogic/Firefox require X509 v3 CA certificates to have a Basic Constraint extension set with field CA set to TRUE

# without the bc=ca:true , firefox won't allow us to import the CA's certificate.

# look after the CA_P12_KEYSTORE_FILE - it will contain our CA private key and should be locked away!

keytool -genkeypair -v -keystore "$CA_P12_KEYSTORE_FILE" \

-storetype PKCS12 -storepass "$CA_P12_KEYSTORE_PASSWORD" \

-keyalg RSA -keysize 1024 -validity 1825 -alias "$CA_KEY_ALIAS" -keypass "$CA_KEY_PASSWORD" -dname "$CA_DNAME" \

-ext "bc=ca:true"

keytool -genkeypair -v -keystore "$SERVER_JKS_KEYSTORE_FILE" \

-storetype JKS -storepass "$SERVER_JKS_KEYSTORE_PASSWORD" \

-keyalg RSA -keysize 1024 -validity 1825 -alias "$SERVER_KEY_ALIAS" -keypass "$SERVER_KEY_PASSWORD" -dname "$SERVER_DNAME"

keytool -genkeypair -v -keystore "$CLIENT_JKS_KEYSTORE_FILE" \

-storetype JKS -storepass "$CLIENT_JKS_KEYSTORE_PASSWORD" \

-keyalg RSA -keysize 1024 -validity 1825 -alias "$CLIENT_KEY_ALIAS" -keypass "$CLIENT_KEY_PASSWORD" -dname "$CLIENT_DNAME"

Export CA certificate

# -rfc - means to output in PEM (rfc style) base64 encoded format, output will look like ----BEGIN.... etc

keytool -exportcert -v -keystore "$CA_P12_KEYSTORE_FILE" \

-storetype PKCS12 -storepass "$CA_P12_KEYSTORE_PASSWORD" \

-alias "$CA_KEY_ALIAS" -file "$CA_CER" -rfc

Generate certificate signing requests for client and server (to be supplied to CA for subsequent signing)

# The public certificates for the client and server keypairs created above are currently self-signed

# (such that, issuer = subject , private key signed its associated public certificate)

# For a production server we want to get our public certificate signed by a valid certificate authority (CA).

# We are going to use the customca we created above to sign these certificates.

# We first need to get a certificate signing request ready ...

keytool -certreq -v -keystore "$SERVER_JKS_KEYSTORE_FILE" \

-storetype JKS -storepass "$SERVER_JKS_KEYSTORE_PASSWORD" \

-alias "$SERVER_KEY_ALIAS" -keypass "$SERVER_KEY_PASSWORD" -file "$SERVER_CSR"

keytool -certreq -v -keystore "$CLIENT_JKS_KEYSTORE_FILE" \

-storetype JKS -storepass "$CLIENT_JKS_KEYSTORE_PASSWORD" \

-alias "$CLIENT_KEY_ALIAS" -keypass "$CLIENT_KEY_PASSWORD" -file "$CLIENT_CSR"

Sign certificate requests

keytool -gencert -v -keystore "$CA_P12_KEYSTORE_FILE" \

-storetype PKCS12 -storepass "$CA_P12_KEYSTORE_PASSWORD" \

-validity 1825 -alias "$CA_KEY_ALIAS" -keypass "$CA_KEY_PASSWORD" \

-infile "$SERVER_CSR" -outfile "$SERVER_CER" -rfc

keytool -gencert -v -keystore "$CA_P12_KEYSTORE_FILE" \

-storetype PKCS12 -storepass "$CA_P12_KEYSTORE_PASSWORD" \

-validity 1825 -alias "$CA_KEY_ALIAS" -keypass "$CA_KEY_PASSWORD" \

-infile "$CLIENT_CSR" -outfile "$CLIENT_CER" -rfc

Import signed certificates

# Once we complete the signing, the certificate is no longer self-signed, but rather signed by our CA.

# the issuer and owner are now different.

# Now we are ready to import the signed public certificates back in to the keystores ...

# keytool prevents us from importing a certificate should it not be able to verify the full signing chain.

# As we leveraged our custom CA, we need to import the CA's public certificate in to our keystore as a

# trusted certificate authority prior to importing our signed certificate.

# otherwise - 'Failed to establish chain from reply' error will occur

keytool -import -v -keystore "$SERVER_JKS_KEYSTORE_FILE" \

-storetype JKS -storepass "$SERVER_JKS_KEYSTORE_PASSWORD" \

-alias "$CA_KEY_ALIAS" -file "$CA_CER" -noprompt

keytool -import -v -keystore "$CLIENT_JKS_KEYSTORE_FILE" \

-storetype JKS -storepass "$CLIENT_JKS_KEYSTORE_PASSWORD" \

-alias "$CA_KEY_ALIAS" -file "$CA_CER" -noprompt

keytool -import -v -keystore "$SERVER_JKS_KEYSTORE_FILE" \

-storetype JKS -storepass "$SERVER_JKS_KEYSTORE_PASSWORD" \

-alias "$SERVER_KEY_ALIAS" -keypass "$SERVER_KEY_PASSWORD" -file "$SERVER_CER" -noprompt

keytool -import -v -keystore "$CLIENT_JKS_KEYSTORE_FILE" \

-storetype JKS -storepass "$CLIENT_JKS_KEYSTORE_PASSWORD" \

-alias "$CLIENT_KEY_ALIAS" -keypass "$CLIENT_KEY_PASSWORD" -file "$CLIENT_CER" -noprompt

Create trust stores

# for one-way SSL

# the trust keystore for the client needs to include the certificate for the trusted certificate authority that signed the certificate for the server

# for two-way SSL

# the trust keystore for the client needs to include the certificate for the trusted certificate authority that signed the certificate for the server

# the trust keystore for the server needs to include the certificate for the trusted certificate authority that signed the certificate for the client

# for two-way SSL connection, the client verifies the identity of the server and subsequently passes its certificate to the server.

# The server must then validate the client identity before completing the SSL handshake

# given our client and server certificates are both issued by the same CA, the trust stores for both will just contain the custom CA cert

keytool -import -v -keystore "$SERVER_JKS_TRUST_KEYSTORE_FILE" \

-storetype JKS -storepass "$SERVER_JKS_TRUST_KEYSTORE_PASSWORD" \

-alias "$CA_KEY_ALIAS" -file "$CA_CER" -noprompt

keytool -import -v -keystore "$CLIENT_JKS_TRUST_KEYSTORE_FILE" \

-storetype JKS -storepass "$CLIENT_JKS_TRUST_KEYSTORE_PASSWORD" \

-alias "$CA_KEY_ALIAS" -file "$CA_CER" -noprompt

Create PKCS12 formats for Android / Browser clients

# note - warning will be given that the customca public cert entry cannot be imported

# TrustedCertEntry not supported

# this is expected

# http://docs.oracle.com/javase/7/docs/technotes/guides/security/crypto/CryptoSpec.html#KeystoreImplementation

# As of JDK 6, standards for storing Trusted Certificates in "pkcs12" have not been established yet

keytool -importkeystore -v \

-srckeystore "$CLIENT_JKS_KEYSTORE_FILE" -srcstoretype JKS -srcstorepass "$CLIENT_JKS_KEYSTORE_PASSWORD" \

-destkeystore "$CLIENT_P12_KEYSTORE_FILE" -deststoretype PKCS12 -deststorepass "$CLIENT_P12_KEYSTORE_PASSWORD" \

-noprompt
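To double-check what actually ended up in a keystore after a conversion like the one above, the entries can be listed by type. The following is only a minimal sketch using the standard `java.security.KeyStore` API; the class name `KeystoreSummary` and method `describe` are hypothetical names made up for illustration.

```java
import java.security.KeyStore;
import java.security.KeyStoreException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical helper: summarises the entries of an already-loaded keystore. */
final class KeystoreSummary {

    /** Returns one line per alias, stating whether it is a key entry or a trusted certificate. */
    static List<String> describe(KeyStore ks) throws KeyStoreException {
        List<String> lines = new ArrayList<String>();
        for (String alias : Collections.list(ks.aliases())) {
            String kind;
            if (ks.isKeyEntry(alias)) {
                kind = "key entry";            // private key plus certificate chain
            } else if (ks.isCertificateEntry(alias)) {
                kind = "trusted certificate";  // public certificate only
            } else {
                kind = "other";
            }
            lines.add(alias + ": " + kind);
        }
        return lines;
    }
}
```

To use it, load the store first, for example with `KeyStore.getInstance("PKCS12")` followed by `load(new FileInputStream("/tmp/client.p12"), "welcome1".toCharArray())`, and pass the result to `describe`. Given the warning mentioned above, I would expect client.p12 to show only the client key entry.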

Configuring WebLogic server for two-way SSL

cp "$SERVER_JKS_KEYSTORE_FILE" /u01/app/oracle/product/Middleware/wlserver_10.3/server/lib

cp "$SERVER_JKS_TRUST_KEYSTORE_FILE" /u01/app/oracle/product/Middleware/wlserver_10.3/server/lib

To use the above keystores, connect to the Administration Console, expand Environment, and select Servers.

Choose the server for which you want to configure the identity and trust keystores, and select Configuration > Keystores.

Change Keystores to be "Custom Identity and Custom Trust"

You would then fill in the relevant fields (keystore fully qualified path, type (JKS), and keystore access password). e.g.

Custom Identity Keystore: /u01/app/oracle/product/Middleware/wlserver_10.3/server/lib/server.jks

Custom Identity Keystore Type: JKS

Custom Identity Keystore Passphrase: welcome1

Custom Trust Keystore: /u01/app/oracle/product/Middleware/wlserver_10.3/server/lib/server-trust.jks

Custom Trust Keystore Type: JKS

Custom Trust Keystore Passphrase: welcome1

SAVE

Next, you need to enable the SSL Listen Port for the server:

[Home >Summary of Servers >XXX > ] Configuration > General.

SSL Listen Port: Enabled (Check)

SSL Listen Port: XXXX

SAVE

Next, you need to tell WebLogic the alias and password in order to access the private key from the Identity Store:

[Home >Summary of Servers >XXX > ] Configuration > SSL.

Identity and Trust Locations: Keystores

Private Key Alias: server

Private Key Passphrase: welcome1

SAVE

Click Advanced at the bottom of the page ([Home >Summary of Servers >XXX > ] Configuration > SSL)

Set the Two Way Client Cert Behaviour attribute to "Client Certs Requested And Enforced"

Client Certs Not Requested: The default (meaning one-way SSL).

Client Certs Requested But Not Enforced: Requests that a client present a certificate. If a certificate is not presented, the SSL connection continues.

Client Certs Requested And Enforced: Requires a client to present a certificate. If a certificate is not presented, the SSL connection is terminated.
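For readers who think in plain JSSE terms rather than WebLogic console settings, the three options above correspond roughly to the "want" and "need" client-auth flags on `javax.net.ssl.SSLParameters`. The sketch below is only an analogy and not how WebLogic implements the setting; the class `ClientCertMode` and its enum constants are hypothetical names.

```java
import javax.net.ssl.SSLParameters;

/** Hypothetical helper: maps the three console options onto JSSE client-auth flags. */
final class ClientCertMode {

    enum Mode { NOT_REQUESTED, REQUESTED_NOT_ENFORCED, REQUESTED_AND_ENFORCED }

    static SSLParameters toSslParameters(Mode mode) {
        SSLParameters params = new SSLParameters();
        switch (mode) {
            case REQUESTED_NOT_ENFORCED:
                // Ask for a client certificate, but carry on if none is sent.
                params.setWantClientAuth(true);
                break;
            case REQUESTED_AND_ENFORCED:
                // Ask for a client certificate and fail the handshake without one.
                params.setNeedClientAuth(true);
                break;
            default:
                // NOT_REQUESTED: both flags stay false (one-way SSL).
                break;
        }
        return params;
    }
}
```

On a standalone JSSE server, the resulting parameters could be applied with `SSLServerSocket.setSSLParameters(...)` or `SSLEngine.setSSLParameters(...)`.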

Check "Use JSSE SSL" box

Failure to check this box will likely result in a "Cannot convert identity certificate" error when restarting the managed server, and the HTTPS port won't be open for connections.

SAVE

Restart server.

Configuring Browser client

From Internet Explorer > Tools > Internet Options > Content > Certificates

Import under the Trusted Root Certification Authorities tab the ca.pem file

- Place all certificates in the following store: "Trusted Root Certification Authorities"

It will popup Security Warning - You are about to install a certificate from a certification authority (CA) claiming to represent.....

Do you want to install this certificate: Yes

Import under the Personal tab the client.p12 file

It will popup with 'To maintain security, the private key was protected with a password'

Supply the password for the private key: welcome1

- Place all certificates in the following store: "Personal"

-----------------

From Firefox > Tools > Options > Advanced > Encryption > View Certificates

From the "Authorities" tab , import ca.pem

check "Trust this CA to identify websites"

ok.

(It will get listed under the organization "MyOrg" in the tree based on the certificate dname that was leveraged)

From the "Your Certificates" tab, import client.p12

Enter the password that was used to encrypt this certificate backup: welcome1

It should state "Successfully restored your security certificate(s) and private key(s)."

Accessing a server configured for two-way SSL from a Browser

IE - May automatically supply the certificate to the server

Firefox -

"This site has requested that you identify yourself with a certificate"

"Choose a certificate to present as identification"

x Remember this decision

Accessing a server configured for two-way SSL from a Java client

Invoke Java with explicit SSL trust store elements set (to trust server's certificate chain) ...

System.setProperty("javax.net.ssl.trustStore", "/C:/Users/mshannon/Desktop/client-trust.jks");

System.setProperty("javax.net.ssl.trustStoreType", "JKS");

System.setProperty("javax.net.ssl.trustStorePassword", "welcome1");

Otherwise you will see...

"javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target"

Given we are using two-way SSL, we must also specify the client certificate details ...

System.setProperty("javax.net.ssl.keyStore", "/C:/Users/mshannon/Desktop/client.jks");

System.setProperty("javax.net.ssl.keyStoreType", "JKS");

System.setProperty("javax.net.ssl.keyStorePassword", "welcome1");

Otherwise you will see...

"javax.net.ssl.SSLHandshakeException: Received fatal alert: bad_certificate"

Unknown Information – Is it possible through System properties to specify which key (based on alias) to use from the client keystore, and also can a password be provided for such a key (alias)?

Currently we are relying on the fact that the client keystore just contains the one key-pair and that key's alias password entry matches the keystore password!
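As far as I am aware, the standard javax.net.ssl.* system properties cover only the keystore location, type, provider and password, so choosing an alias or supplying a separate key password appears to need a programmatic SSLContext. The following is a minimal sketch of that approach under those assumptions; `FixedAliasSslContext` and `FixedAliasKeyManager` are hypothetical names, and the key manager deliberately ignores the key types and issuers requested by the server.

```java
import java.net.Socket;
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import java.security.Principal;
import java.security.PrivateKey;
import java.security.cert.X509Certificate;
import javax.net.ssl.KeyManager;
import javax.net.ssl.KeyManagerFactory;
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLEngine;
import javax.net.ssl.TrustManagerFactory;
import javax.net.ssl.X509ExtendedKeyManager;
import javax.net.ssl.X509KeyManager;

/** Hypothetical helper: builds an SSLContext that always presents one chosen alias. */
final class FixedAliasSslContext {

    /** Wraps the default key manager but always answers with the configured client alias. */
    static final class FixedAliasKeyManager extends X509ExtendedKeyManager {
        private final X509KeyManager delegate;
        private final String alias;

        FixedAliasKeyManager(X509KeyManager delegate, String alias) {
            this.delegate = delegate;
            this.alias = alias;
        }

        @Override public String chooseClientAlias(String[] keyType, Principal[] issuers, Socket socket) {
            return alias; // assumption: the chosen key suits whatever the server asked for
        }
        @Override public String chooseEngineClientAlias(String[] keyType, Principal[] issuers, SSLEngine engine) {
            return alias;
        }
        @Override public String chooseServerAlias(String keyType, Principal[] issuers, Socket socket) {
            return delegate.chooseServerAlias(keyType, issuers, socket);
        }
        @Override public String[] getClientAliases(String keyType, Principal[] issuers) {
            return delegate.getClientAliases(keyType, issuers);
        }
        @Override public String[] getServerAliases(String keyType, Principal[] issuers) {
            return delegate.getServerAliases(keyType, issuers);
        }
        @Override public X509Certificate[] getCertificateChain(String requested) {
            return delegate.getCertificateChain(requested);
        }
        @Override public PrivateKey getPrivateKey(String requested) {
            return delegate.getPrivateKey(requested);
        }
    }

    /** keyPassword protects the private key entry and may differ from the keystore password. */
    static SSLContext build(KeyStore identity, char[] keyPassword, String alias, KeyStore trust)
            throws GeneralSecurityException {
        KeyManagerFactory kmf = KeyManagerFactory.getInstance(KeyManagerFactory.getDefaultAlgorithm());
        kmf.init(identity, keyPassword);
        KeyManager[] keyManagers = kmf.getKeyManagers();
        for (int i = 0; i < keyManagers.length; i++) {
            if (keyManagers[i] instanceof X509KeyManager) {
                keyManagers[i] = new FixedAliasKeyManager((X509KeyManager) keyManagers[i], alias);
            }
        }
        TrustManagerFactory tmf = TrustManagerFactory.getInstance(TrustManagerFactory.getDefaultAlgorithm());
        tmf.init(trust);
        SSLContext context = SSLContext.getInstance("TLS");
        context.init(keyManagers, tmf.getTrustManagers(), null);
        return context;
    }
}
```

The two KeyStore arguments would be loaded from client.jks and client-trust.jks, and the resulting context could then be installed with `HttpsURLConnection.setDefaultSSLSocketFactory(context.getSocketFactory())` in place of the System.setProperty calls shown earlier.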

Configuring WebLogic Server to allow authentication using the client certificate

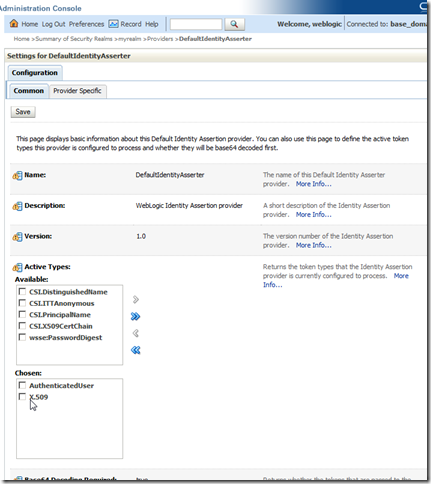

The above steps should have resulted in two-way SSL transport security. WebLogic however can also be configured to extract the client username from the client supplied public certificate and map this to a user in the identity store. To do this, we need to configure Default Identity Asserter to allow authentication using the client certificate. The deployed web application must also allow client certificate authentication as a login method.

Configuring Default Identity Asserter to support X.509 certificates involves:

1) Connecting to the WebLogic Server Administration Console as an administrator (e.g. weblogic user)

2) Navigating to appropriate security realm (e.g. myrealm)

3) Under the Providers tab and Authentication sub-tab selecting DefaultIdentityAsserter (WebLogic Identity Assertion provider)

4) On the Settings for DefaultIdentityAsserter page under the Configuration tab and Common sub-tab choosing X.509 as a supported token type and clicking Save.

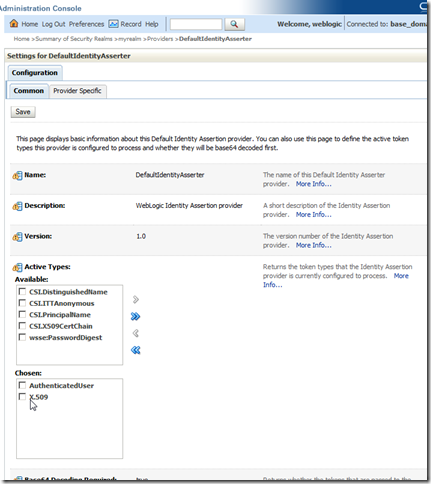

5) On the Settings for DefaultIdentityAsserter page under the Configuration tab and Provider-Specific sub-tab enabling the Default User Name Mapper and choosing the appropriate X.509 attribute within the subject DN to leverage as the username and correcting the delimiter as appropriate and clicking Save.

6) Restarting the WebLogic Server.
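To illustrate what mapping an attribute within the subject DN to a username means in step 5, here is a small sketch that pulls one attribute (such as CN) out of a DN string using the JDK's `javax.naming.ldap.LdapName`. It is not WebLogic's Default User Name Mapper, just the same idea in plain Java; the class name `SubjectDnAttribute` is hypothetical.

```java
import javax.naming.InvalidNameException;
import javax.naming.ldap.LdapName;
import javax.naming.ldap.Rdn;

/** Hypothetical helper: extracts a single attribute value from a certificate subject DN. */
final class SubjectDnAttribute {

    /** Returns the value of the first RDN of the given type (e.g. "CN"), or null if absent. */
    static String extract(String subjectDn, String attributeType) throws InvalidNameException {
        LdapName name = new LdapName(subjectDn);
        for (Rdn rdn : name.getRdns()) {
            if (rdn.getType().equalsIgnoreCase(attributeType)) {
                return String.valueOf(rdn.getValue());
            }
        }
        return null;
    }

    public static void main(String[] args) throws InvalidNameException {
        // CLIENT_DNAME from the constants section
        String dn = "CN=mshannon, OU=MyOrgUnit, O=MyOrg, L=MyTown, ST=MyState, C=MyCountry";
        System.out.println(extract(dn, "CN")); // prints "mshannon"
    }
}
```

With the client certificate generated earlier, choosing CN as the attribute would therefore map the connection to a user named mshannon, which must exist in the identity store.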

The deployed web application must also list CLIENT-CERT as an authentication method in the web.xml descriptor file:

<login-config>

<auth-method>CLIENT-CERT</auth-method>

</login-config>